.NET Core on Mac OS X

After Microsoft started to open-source .NET Core, it eventually found its way onto my Mac as well.

The easiest way seems to be installing the .NET Execution Environment using Homebrew, based on the instructions given on GitHub.

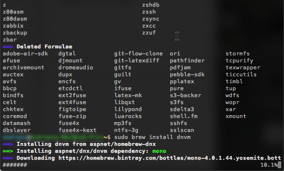

sudo brew tap aspnet/dnx
sudo brew update
sudo brew install dnvm

After registering dnvm via

source dnvm.sh

one should now be able to install .NET Core using the following dnvm commands:

dnvm upgrade -u
sudo dnvm install latest -r coreclr -u

For whatever reason, I kept running into issues such as

Installing to /Users/andreas/.dnx/runtimes/dnx-mono.1.0.0-beta6-12004
find: /Users/andreas/.dnx/runtimes/dnx-mono.1.0.0-beta6-12004/bin/: No such file or directory
chmod: /Users/andreas/.dnx/runtimes/dnx-mono.1.0.0-beta6-12004/bin/dnx: No such file or directory

First of all, I tried to update dnvm itself and again ran into issues:

foo@mac-pr:~/.dnx$ dnvm update-self
~/.dnx/dnvm/dnvm.sh doesn't exist. This command assumes you have installed dnvm in the usual location and are trying to update it. If you want to use update-self then dnvm.sh should be sourced from ~/.dnx/dnvm
andreas@mac-pro:~/.dnx$

Trying to create the missing folders and links manually

sudo mkdir ~/.dnx/dnvm; sudo ln -s /usr/local/Cellar/dnvm/1.0.0-dev/bin/dnvm.sh ~/.dnx/dnvm/dnvm.sh

as well as sourcing dnvm.sh directly from the ~/.dnx/dnvm location did not help.

Every time, running

dnvm update-self

ended up in something like

Downloading dnvm.sh from https://raw.githubusercontent.com/aspnet/Home/dev/dnvm.sh
Warning: Failed to create the file /Users/andreas/.dnx/dnvm/dnvm.sh:
Warning: Permission denied
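The "Permission denied" warning is consistent with ~/.dnx/dnvm having been created with sudo mkdir earlier, which would leave the directory owned by root and not writable for the regular account. That is an assumption about the cause, not something the log proves. A minimal sketch for checking it follows; the helper name dnx_dir_status is hypothetical and not part of dnvm.

```shell
#!/bin/sh
# Hypothetical helper (not part of dnvm): report whether a directory
# exists and whether the current user may write to it.
# Defaults to ~/.dnx/dnvm, the location dnvm update-self complains about.
dnx_dir_status() {
  dir="${1:-$HOME/.dnx/dnvm}"
  if [ ! -d "$dir" ]; then
    echo "missing"
  elif [ -w "$dir" ]; then
    echo "writable"
  else
    echo "not-writable"
  fi
}

# Usage: dnx_dir_status            (checks ~/.dnx/dnvm)
#        dnx_dir_status ~/.dnx     (checks the parent directory)
```

If this reports not-writable, handing the tree back to your own account with something like sudo chown -R "$(id -un)" ~/.dnx may be enough. I have not verified that this resolves the dnvm error on this setup.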

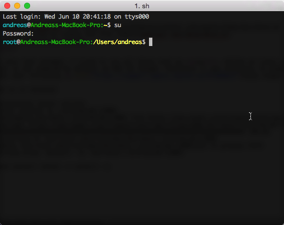

As a very last attempt, I tried to run all of this as root via su. Unlike on many Linux systems, the root user is not enabled by default on Mac OS X, so it was first necessary to enable it following these steps.

Now you can run su in any terminal.

Determining latest version
Latest version is 1.0.0-beta6-12004
Downloading dnx-mono.1.0.0-beta6-12004 from https://www.myget.org/F/aspnetvnext/api/v2
Download: https://www.myget.org/F/aspnetvnext/api/v2/package/dnx-mono/1.0.0-beta6-12004
######################################################################## 100.0%
Installing to /var/root/.dnx/runtimes/dnx-mono.1.0.0-beta6-12004
Adding /var/root/.dnx/runtimes/dnx-mono.1.0.0-beta6-12004/bin to process PATH
Setting alias 'default' to 'dnx-mono.1.0.0-beta6-12004'

Finally, you can now run and successfully complete

dnvm install latest -r coreclr -u

and write and run your first .NET application on Mac.

Unfortunately, once you have followed the above instructions, all installed bits are only available to root.
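The install log above shows why: the runtime landed under /var/root/.dnx, which is root's home directory, not under the regular account's ~/.dnx. A small sketch for listing what is installed under a given .dnx directory follows. The function name list_dnx_runtimes is hypothetical, and the runtimes/ layout is assumed from the paths in the log output.

```shell
#!/bin/sh
# Hypothetical helper (not part of dnvm): print the names of the runtime
# directories found under <dnx_home>/runtimes, one per line.
# Returns non-zero if there is no runtimes directory at all.
list_dnx_runtimes() {
  dnx_home="${1:-$HOME/.dnx}"
  [ -d "$dnx_home/runtimes" ] || return 1
  for d in "$dnx_home"/runtimes/*/; do
    # Skip the unexpanded glob when the directory is empty.
    if [ -d "$d" ]; then
      basename "$d"
    fi
  done
  return 0
}

# Usage: list_dnx_runtimes                  (current user's ~/.dnx)
#        list_dnx_runtimes /var/root/.dnx   (root's copy; needs root to read)
```

Comparing the output for both locations should show whether a runtime exists only on root's side.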