First headline in this morning’s news: Google stops Google Reader (please bear in mind, this link won’t work anymore in the future). Google wants to power down Google Reader, among other APIs and services, as part of the spring cleaning that has been going on since 2011. Personally, this is the third time I am affected by Google’s cutbacks, after the Feedburner burnout last year.

While I was annoyed at first, I wanted to think it through from various perspectives rather than just come up with yet another rant post about Google’s attitude.

The Business Point of View

Google is not doing anything wrong (I guess) from a business point of view. They simply cut projects, teams or cost centers with little or no revenue. I have seen this several times during my time at Microsoft, where teams or studios were shut down because their revenue did not meet expectations. Larry Page wanted to focus on core products and fewer speculative projects, which does make sense considering the shareholders behind Google. Free services that nobody pays for still require manpower for development and maintenance and (not to be underestimated) bare metal down in Google’s data centers. Consequently, cutting them is a plan to increase profit, cut down losses and avoid spending money.

The User’s Point of View

As a user, you might rely on these services. Maybe you built your website on various Google APIs (as they have been free), you maintained your RSS feeds in Google Reader and so on. Even with several weeks of notice, you need to change technologies, maybe rebuild or recode your page and, even worse, change habits. At some point in time, after this has happened one, two or three times (depending on your very personal potential to suffer), you start to wonder whether relying on such services is still a good idea.

The Developer’s Point of View

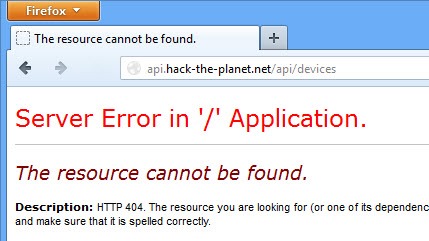

There are quite a few apps, tools and pages out there that are based on, or heavily depend on, Google’s APIs, including Google Reader. Not only will these apps and tools stop working, but users who bought these products will also be forced to stop using them. With feedly, there is a timely alternative reader, and with Normandy developers get an API they might use for their products. However, Nick Bradbury has already announced that he will stop working on the Windows client FeedDemon, which heavily depends on the Google API for synchronization. More will definitely follow…

The Consequences

As a developer, I was affected once before; as a user, I am now affected for the second time. With both services cut, I am left with Google Calendar. While Google might or might not continue this service in the future, one might rethink whether using it is a good choice. Keep in mind that we do not pay for it as users, and that Google App Sync is by now only available for business users (who are probably paying for it). Google Calendar Sync was a great tool to sync between Outlook and Google Calendar. I fought my way through the setup on Windows 7 three years ago, right after they stopped development for it.

There is already a petition for keeping Google Reader alive, supported by more than 35,000 users (nothing compared to the 10 million users using G+ stated by Larry Page). Still, it is less than likely that Google will continue the service.

The Business Point of View Revisited

I wonder if Google thought of charging for these services. I wonder if one (e.g. I) would pay for such a service. It would definitely depend on the amount they would charge. A few bucks a year won’t hurt, and with a few tens of thousands of users they might pay the bills for this service, one might think. On the other hand, a company like Google might not be interested in any service with less than ten million dollars of revenue (please put in whatever amount you think is suitable) or a million users…