In a previous post, I described the first steps in virtualizing a Windows Server 2008. In this article I describe how I proceeded after the request for my own RIPE subnet was approved. Now I can concentrate on the next point: installing VMware. Since I want to set up this machine for virtualization, I have to perform a few steps first. As a result, this post is mostly about my fight with Debian Linux, which is the host system.

After logging in, I realize that updating the package database might not be the worst idea. So I do that and install a Norton Commander-like tool for real men:

apt-get update

apt-get install mc

This actually makes things much easier.

Now I have to activate IP forwarding in /etc/sysctl.conf by adding

net.ipv4.ip_forward=1

and bring up the additional IP on the host system by adding

up ip addr add 192.168.1.1/29 dev eth0

to /etc/network/interfaces. Additionally, I have to add a host route via my gateway 192.168.0.1 so that my new subnet is reachable, by adding

pointopoint 192.168.0.1

to the eth0 stanza in /etc/network/interfaces. Installing iproute with

apt-get install iproute

and restarting the interface by calling

/etc/init.d/networking restart

finally makes my IP pingable. Quite a fight so far if you don’t do this on a regular basis. Additionally, I installed the powersave package and reconfigured several settings in /etc/powersave to increase performance.
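As a rough sketch of how the forwarding change could be applied and checked without a reboot: the helper below appends a line to a config file only if it is not already there, so running it twice does no harm. The name `ensure_line` is made up for illustration and is not part of Debian; the commented commands assume a root shell on the host and the interface name eth0 used above.

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present.
# ensure_line is a hypothetical helper name, not a Debian tool.
ensure_line() {
    file=$1
    line=$2
    grep -qxF -e "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Possible usage as root (commented out so the sketch is safe to source):
# ensure_line /etc/sysctl.conf 'net.ipv4.ip_forward=1'
# sysctl -p                     # reload /etc/sysctl.conf
# sysctl net.ipv4.ip_forward    # should now report the value 1
# ip addr show dev eth0         # the additional address should be listed
```

The helper assumes the file ends with a newline, which is normally the case for /etc/sysctl.conf.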

I just got the tip to put my virtual machines on a separate disk. Since I have a spare 400 GB disk, I have to create a partition and format it.

cfdisk /dev/sdb

mkfs -t ext3 /dev/sdb1

Let’s create a directory for the virtual machines and mount the disk

mkdir /VMs

mount -t ext3 /dev/sdb1 /VMs

Now a final tweak to /etc/fstab, adding

/dev/sdb1 /VMs ext3 defaults 0 0

and I am done.
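A typo in /etc/fstab tends to show up only at the next boot, so it can be worth checking the entry right away. The following is a minimal sketch, not part of the original setup: `fstab_type` is a hypothetical helper that prints the filesystem type an fstab-style file declares for a mount point, and the commented remount assumes a root shell with /VMs currently mounted.

```shell
#!/bin/sh
# Print the filesystem type declared for a mount point in an fstab-style file.
# fstab_type is a hypothetical helper name used only for this sketch.
# Arguments: $1 = path to the fstab file, $2 = mount point.
fstab_type() {
    awk -v mp="$2" '$1 !~ /^#/ && $2 == mp { print $3 }' "$1"
}

# Possible usage as root (commented out so the sketch is safe to source):
# fstab_type /etc/fstab /VMs    # should print ext3
# umount /VMs && mount /VMs     # remount using only the fstab entry
# df -h /VMs                    # confirm the disk is mounted there
```

If the remount works with just the mount point given, the fstab line is being picked up as intended.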

Finally, I start installing VMware. I was pointed to a German how-to written by Till Brehm, which includes quite detailed instructions.

A few prerequisites are needed before I start. I install the required packages, about 220 MB, with

apt-get install linux-headers-`uname -r` libx11-6 libx11-dev x-window-system-core x-window-system xspecs libxtst6 psmisc build-essential
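The kernel headers matter because the VMware installer compiles kernel modules against the running kernel. A small sketch of how one might confirm that they are in place, assuming the usual layout in which /lib/modules/&lt;kernel version&gt;/build points at the headers; `kernel_build_dir` is a name invented for this example:

```shell
#!/bin/sh
# Print the directory where headers for the running kernel are usually linked.
# kernel_build_dir is a hypothetical helper name used only for this sketch.
kernel_build_dir() {
    printf '/lib/modules/%s/build\n' "$(uname -r)"
}

# Report whether that directory exists on this machine.
if [ -d "$(kernel_build_dir)" ]; then
    echo "headers found in $(kernel_build_dir)"
else
    echo "headers missing for kernel $(uname -r)"
fi
```

If the headers are reported missing after a kernel upgrade, a reboot into the new kernel or a matching linux-headers package is the likely fix.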

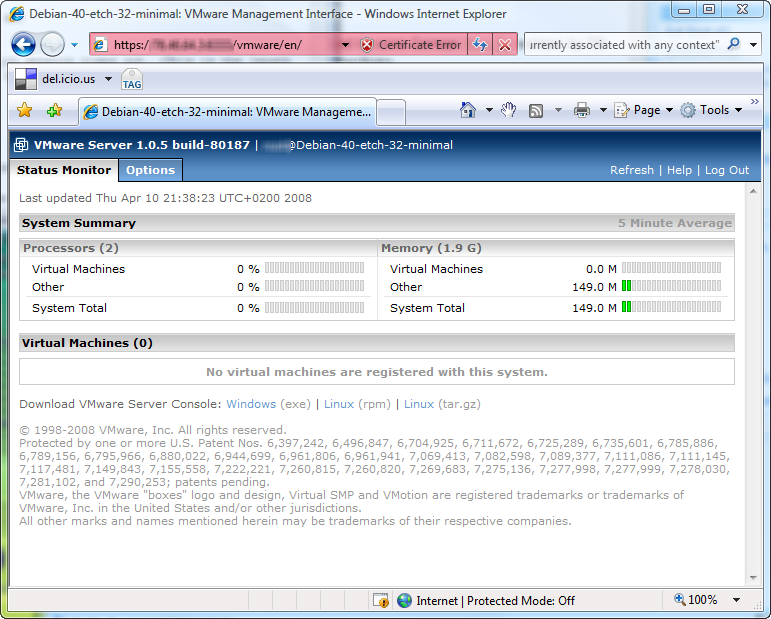

VMware can be downloaded from http://www.vmware.com/download/server/. I skip the management console here, since I will use it on my Windows workstation, and fetch only the server and management interface binaries:

wget http://download3.vmware.com/...

tar xvfz VMware-server-*.tar.gz

cd vmware-server-distrib

./vmware-install.pl

Now I simply accept the defaults for the following installation. Only at one point do I have to tell the script that my virtual machines will be located at /VMs. Now I continue with the management interface:

tar xvfz VMware-mui-*.tar.gz

cd vmware-mui-distrib

./vmware-install.pl

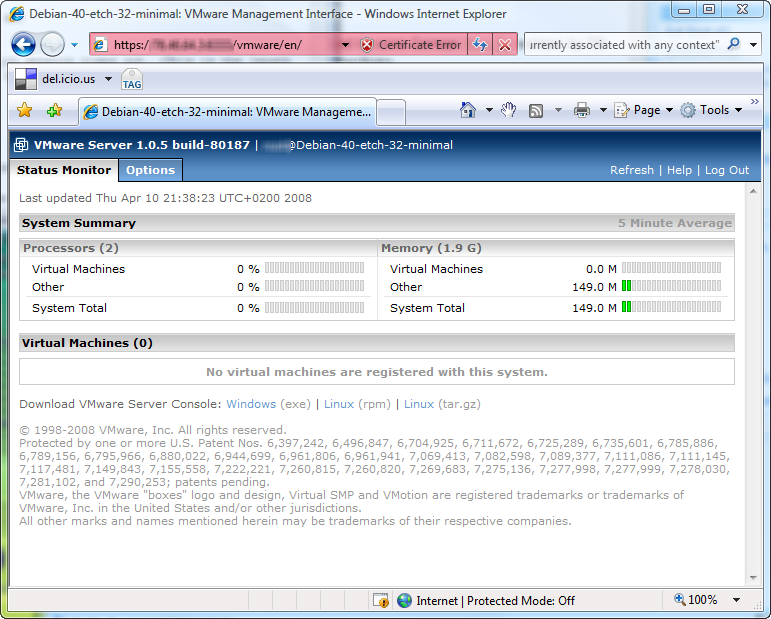

The Web-based management interface seems to work perfectly after installing.

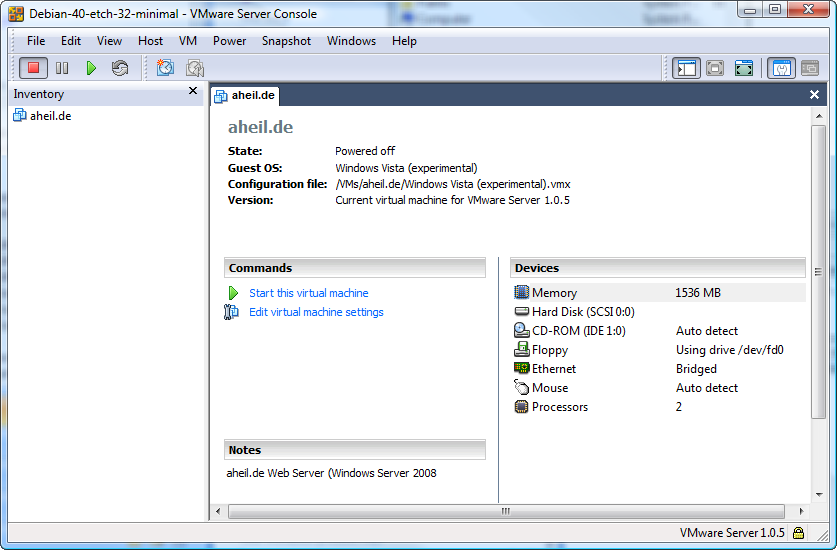

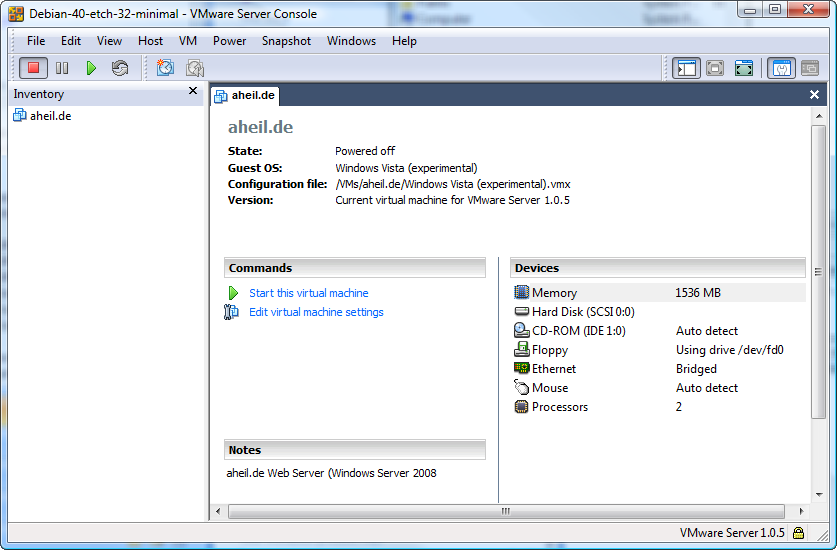

After installing the management console on Windows, I ran into some trouble. During compilation of the corresponding modules, the VMware script had not been able to start the inetd service, so I could not connect to the VMware server. After restarting the service manually, it worked perfectly and I set up my virtual machine.

Now I have to copy the installation files for Windows Server 2008.